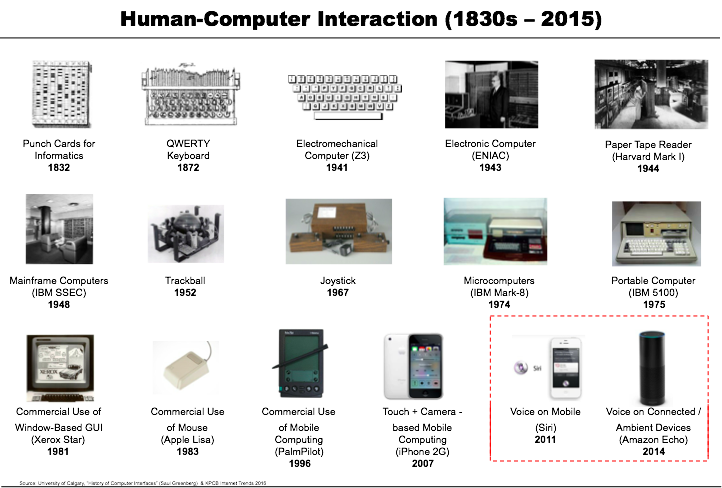

Hundreds of thousands of years ago, we started with SOUND (voice); much later came the WORD (text).

90% of all human communication still happens through voice.

The keyboard trumped the punch card. The mouse coexisted with the keyboard. The touchscreen made the mechanical keyboard redundant on most phones. Yet voice remains our dominant mode of communication because it is natural. Unfortunately, technology took a long time to catch up with it.

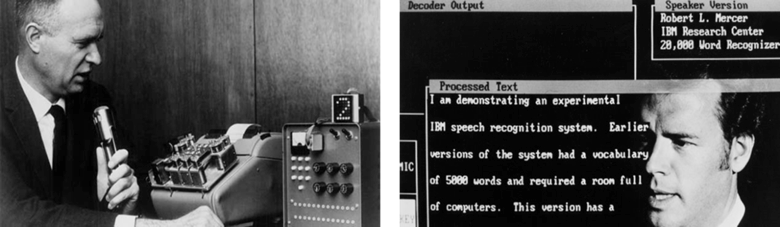

There were several attempts at building voice-driven machines. In the 1960s, IBM unveiled an early voice recognition system called Shoebox, which could do simple math in response to voice commands but recognized just 16 words.

In the ’80s, the vocabulary of IBM’s speech recognition system expanded from 5,000 to 20,000 words. However, the experience still felt as awkward as it did for one Montgomery Scott of the USS Enterprise.

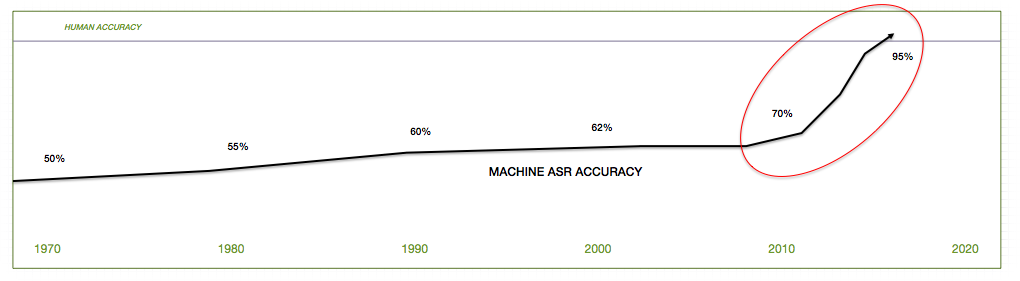

PART I: Rapid Advances in ASR Accuracy

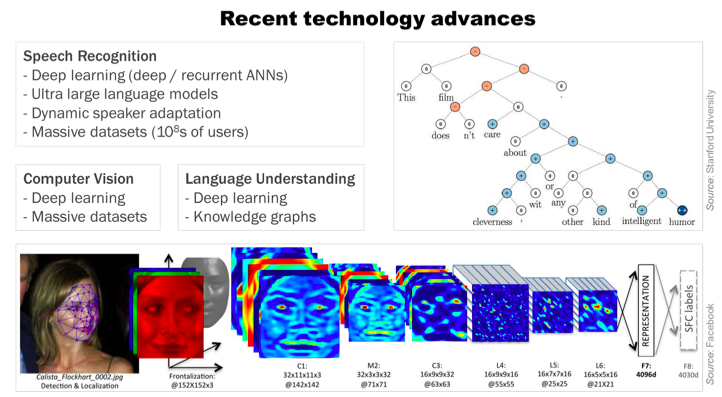

Automatic Speech Recognition (ASR) is the ability of a machine to recognize spoken words. For decades, progress was slow because ASR accuracy remained far below human level. Then, around 2010, ASR accuracy reached an inflection point.

The chart above shows that between 2010 and 2015, advances in speech recognition accuracy surpassed everything achieved in the prior 30 to 40 years. In fact, we are now at the threshold where machine ASR will soon surpass human speech recognition.

Human-like ASR accuracy is achievable now for the first time since the dawn of AI.
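ASR accuracy of the kind charted above is usually reported as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn a recognizer's transcript into a reference transcript, divided by the number of words in the reference. Lower is better. Here is a minimal Python sketch of that calculation using word-level edit distance; the function name and the sample sentences are illustrative and are not taken from any benchmark.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = minimum edits turning the first i reference words
    # into the first j hypothesis words (Levenshtein distance over words).
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match when cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# Illustrative transcripts (made up): "card" was misheard as "car".
print(word_error_rate("what is my card balance today", "what is my car balance today"))
```

In the example, one error in six reference words gives a WER of about 0.167. Because insertions count as errors, WER can exceed 1.0 when a recognizer adds many extra words.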

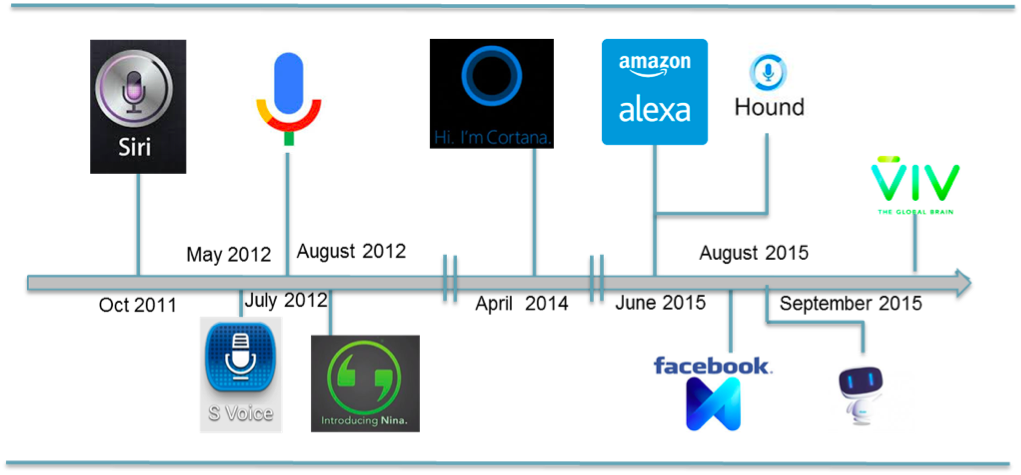

Improving accuracy led to the first wave (2011–2013) of voice-based assistants: Siri, Google Now, S Voice, and Nina. These assistants were held back by the limitations of speech recognition and Natural Language Understanding (NLU) at the time.

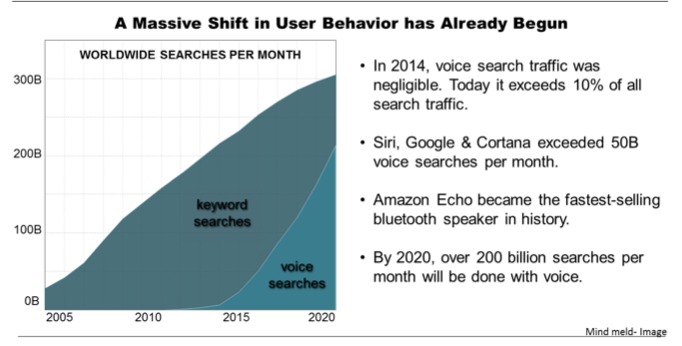

Like many new technologies, these assistants initially failed to live up to users’ expectations and often returned unhelpful search results. However, as the technology improved and users learned how to talk to their devices, voice-driven offerings multiplied, leading to a massive shift in user behavior.

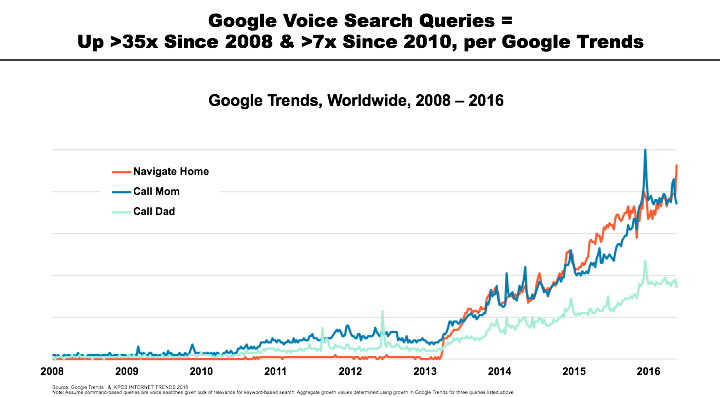

Massive Shift in User Behavior: A Huge Spike in Voice Search and Related Commands

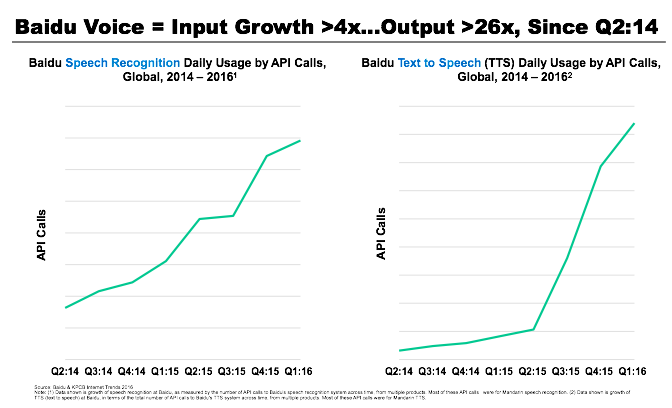

The ‘voice first’ trend is more evident on some Asian platforms, e.g. Baidu. Walk down any busy street in Shenzhen, China, and you’ll notice people using their phones like walkie-talkies. It’s easier and faster to do a voice search or leave a quick voice message than to type out a Chinese text message on a small mobile keyboard.

Things that were very challenging and expensive five years ago are now becoming mainstream. In the last two years alone, there has been a huge spike in people using voice to access content and navigate their devices. The cause of this shift is very simple: human nature.

Humans are innately tuned to converse with others. It’s how we share knowledge and emotions, and how we organize ourselves. Voice has been part of our makeup for hundreds of thousands of years.

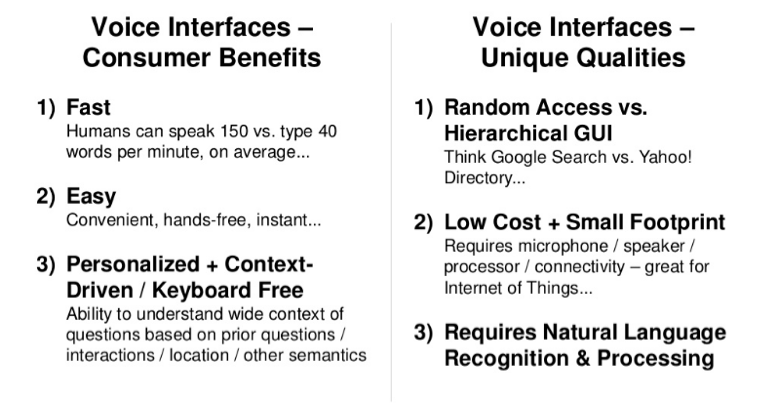

Voice is the Most Efficient Form of Computing Input

The global ubiquity of smartphones has given us a world of information — and answers — at our fingertips. But why take the time to type a question into Google when we can ask out loud and have an answer in seconds?

Voice interfaces allow users to ask questions, receive answers and even accomplish complex tasks in both the digital and physical world through natural dialogue.

This shift, combined with rapid technical advances, has led to a massive surge in voice-based intelligent assistants and platforms, which I have written about previously.

Voice is the Next Frontier, and It is Getting Crowded

In Wave I (2011–13) of voice-based intelligent assistants, we saw Voicebox, Siri, Google Now, S Voice, and Nina; in Wave II (2014 to the present), we saw the launch of Microsoft’s Cortana, Amazon’s Echo, Hound, Google Home, Viv, Facebook’s M, and Jibo.

All this progress and rapid adoption raise a whole new set of open questions: “Is voice the next frontier? What role does design play in creating one of these experiences? And, most importantly, what happens when our devices finally begin to understand us better than we understand ourselves?”

Remember, thus far, all of this progress has been about how humans interact with machines.

Before we try to answer these questions, let’s look at an important thread in technology history: the transfer of knowledge.

Transfer of Knowledge and Machine-to-Machine Communication

The transfer of knowledge, thus far, has evolved in three paradigms — human to human (past), human to machine (present), and machine to machine (future). For the first time in our history, the new transfer of knowledge will not involve humans.

Advancements in the Internet of Things (IoT), Artificial Intelligence (AI), and robotics are ensuring that the new transfer of knowledge and skills won’t be to humans at all. It will be direct, machine to machine (M2M).

M2M refers to technology that enables networked devices to exchange information and perform actions without manual human assistance: for example, an Amazon Echo ordering an Uber or placing an order on Amazon, all from a simple spoken command.
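To make the M2M idea concrete, here is a minimal in-process publish/subscribe sketch in which a voice assistant turns a spoken command into a message that another service acts on with no further human input. The topic name `ride.request`, the handlers, and the payload fields are hypothetical and do not correspond to any real Amazon or Uber API; an in-memory dictionary stands in for the networked message broker that real M2M systems use.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Message = Dict[str, str]
Handler = Callable[[Message], str]


class MessageBus:
    """Toy in-memory publish/subscribe bus standing in for a networked broker."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Message) -> List[str]:
        # Deliver the message to every subscribed machine and collect the replies.
        return [handler(message) for handler in self._subscribers[topic]]


def ride_service(message: Message) -> str:
    # Hypothetical ride-hailing service that books a car from the message payload.
    return f"Car booked from {message['pickup']} to {message['destination']}"


def voice_assistant(bus: MessageBus, spoken_command: str) -> List[str]:
    # Hypothetical command handling: anything mentioning "ride" becomes a ride request.
    if "ride" not in spoken_command.lower():
        return []
    destination = spoken_command.rsplit(" to ", 1)[-1]
    # Assumption: the pickup point is the device's registered home address.
    return bus.publish("ride.request", {"pickup": "home", "destination": destination})


bus = MessageBus()
bus.subscribe("ride.request", ride_service)
print(voice_assistant(bus, "Order me a ride to the airport"))
```

The only human step is the spoken command; everything after `publish` is one machine talking to another. In a real deployment the bus would be a network protocol such as MQTT so that separate devices could exchange these messages.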

We’re entering the age of intelligent applications, which are purpose-built and informed by contextual signals like location, hardware sensors, previous usage, and predictive computation. They respond to us when we need them to and represent a new way of interacting with our devices.

Connected Devices are Changing Everything

Voice bots like Alexa, Siri, Cortana, and Google Now embed search-like capabilities directly into the operating system. By 2020, it is expected that more than 200 billion searches will be via voice. In four years, there will be 3.5 billion computing devices with microphones, and fewer than 5% will have keyboards, thereby impacting homes, cars, commerce, banking, education, and every other sphere of human interaction.

Amazon Echo is a voice-controlled device that, using the Alexa Voice Service, reads audiobooks and news, plays music, answers questions, reports traffic and weather, gives information on local businesses, provides sports scores and schedules, controls lights, switches, and thermostats, orders an Uber or Domino’s, and more.

Other intelligent platforms, including Google Now and Microsoft’s Cortana, can help organize your life like a virtual personal assistant: managing your calendar, tracking packages, and checking upcoming flights.

Our increasing dependence on voice search and organizational platforms has accelerated the rise of machine-to-machine communication, and this will have a serious impact on the future trajectory of commerce, payments, and home devices.

It feels like search is currently at a crossroads. With the growth of messaging apps, some argue that the future of search and commerce lies within messaging apps, with chatbots acting as personal assistants (for example, Facebook M and Google Assistant). Whether through voice, chatbots, or both, search and commerce will certainly not be the same in the next five years.

PART II: What Does the Future Hold?

Advanced voice technology will soon be ubiquitous, as natural and intelligent UI integrates seamlessly into our daily lives.

Within the next four years, 50% of all searches are going to be either images or speech.

The human voice will be a primary interface for the smart, connected home, providing a natural means to communicate with kitchen appliances, alarm systems, sound systems, lights, and more. More and more carmakers will adopt intelligent, voice-driven systems for entertainment and location-based search, keeping drivers’ and passengers’ eyes and hands free. Audio and video entertainment systems will respond to naturally spoken commands for content discovery. Voice-controlled devices will also dominate workplaces that require hands-free mobility, such as hospitals, warehouses, laboratories, and production plants.

After 40-odd years of development, voice recognition has nearly reached its zenith. Intelligent assistants (IAs) can now recognize speech effectively. With groundbreaking advances in artificial intelligence, we are finally overcoming the formerly insurmountable challenges of understanding speech by training and adapting systems through machine learning.

It's all about Natural Language Understanding (NLU)

Natural language processing, the field of computing and AI concerned with making computers understand and speak the natural languages of humans, has come a long way in recent years.

Voice interfaces were once the stuff of science fiction, but recent advances in language understanding, machine learning, computer vision, and speech recognition have made them far more practical, making it easier to communicate with the devices around us.

I have written earlier about some UI trends and how we are fast moving into a Zero UI environment.

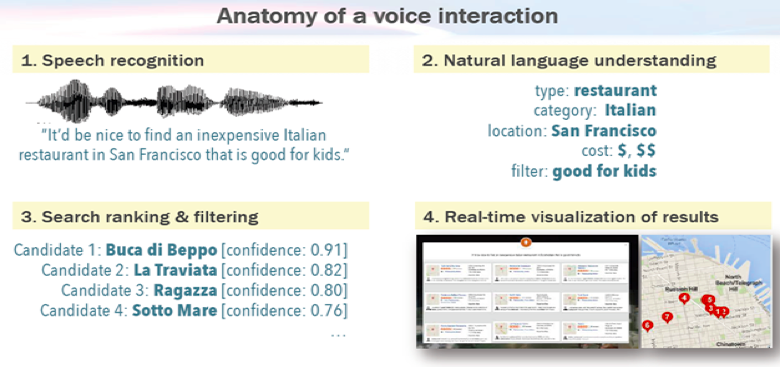

Anatomy of a Voice Interaction and NLU

For every application, building a voice interaction and NLU model is a structured process, which involves:

1) Creating a custom knowledge graph from the database or website.

2) Training NLU models to understand and interpret queries.

3) Optimizing machine learning algorithms to identify and perform the correct action.

4) Integrating into client applications on all major platforms.
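The four steps above can be illustrated with a deliberately tiny Python sketch. A dictionary stands in for the knowledge graph (step 1), keyword overlap stands in for a trained NLU model (step 2), a lookup table maps the recognized intent to an action (step 3), and a single entry-point function is what client apps would call (step 4). Every name here (`KNOWLEDGE_GRAPH`, the intents, the keyword sets, the venues) is hypothetical; a production system would replace the keyword scoring with a statistical model trained on labeled utterances.

```python
import re
from typing import Callable, Dict, Optional, Set, Tuple

# Step 1 (stand-in): a tiny hypothetical "knowledge graph" built from a venue database.
KNOWLEDGE_GRAPH: Dict[str, Dict[str, str]] = {
    "pizza palace": {"type": "restaurant", "hours": "11am-11pm"},
    "city library": {"type": "library", "hours": "9am-6pm"},
}

# Step 2 (stand-in): keyword sets per intent instead of a trained NLU model.
INTENT_KEYWORDS: Dict[str, Set[str]] = {
    "get_hours": {"open", "opening", "hours", "close", "closing"},
    "get_type": {"kind", "type", "sort"},
}

# Step 3 (stand-in): map each intent to the action that answers it.
ACTIONS: Dict[str, Callable[[str], str]] = {
    "get_hours": lambda entity: f"{entity.title()} is open {KNOWLEDGE_GRAPH[entity]['hours']}.",
    "get_type": lambda entity: f"{entity.title()} is a {KNOWLEDGE_GRAPH[entity]['type']}.",
}


def classify(utterance: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (intent, entity); either is None when nothing matches."""
    text = utterance.lower()
    tokens = set(re.findall(r"[a-z']+", text))
    # Choose the intent whose keywords overlap the utterance the most.
    intent, best_overlap = None, 0
    for name, keywords in INTENT_KEYWORDS.items():
        overlap = len(tokens & keywords)
        if overlap > best_overlap:
            intent, best_overlap = name, overlap
    # Slot filling: the first known entity mentioned in the utterance.
    entity = next((name for name in KNOWLEDGE_GRAPH if name in text), None)
    return intent, entity


def handle_utterance(utterance: str) -> str:
    # Step 4: one entry point that client apps on any platform could call.
    intent, entity = classify(utterance)
    if intent is None or entity is None:
        return "Sorry, I didn't understand that."
    return ACTIONS[intent](entity)


print(handle_utterance("What time does Pizza Palace open?"))
```

Keeping the knowledge, the understanding step, and the action table separate mirrors the structure of the list: each stage can be improved (a real graph, a trained classifier, richer actions) without rewriting the others.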

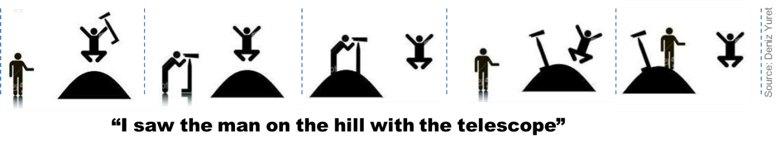

The breadth of data and context also poses a challenge to delivering high-level natural language understanding. For instance, “I saw the man on the hill with the telescope” can mean any of these.
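One way to see why this sentence is hard is to count the grammatical ways its two prepositional phrases can attach. The sketch below enumerates attachments under simplifying assumptions of our own: each prepositional phrase is treated as a single unit, the verb is the root at position 0, and attachments may not cross (the dependency tree must be projective). Under those assumptions it finds five readings. It illustrates structural ambiguity only; it is not how a production NLU system resolves it.

```python
from itertools import product
from typing import Dict, List, Tuple

# Simplification (assumption): each prepositional phrase is one unit, and
# attaching to "on the hill" means modifying the noun "hill".
VERB, MAN, ON_HILL, WITH_TELESCOPE = range(4)
LABELS = {VERB: "saw", MAN: "the man", ON_HILL: "the hill"}


def is_projective(heads: Dict[int, int]) -> bool:
    """True if no attachment arc crosses another.

    heads maps each dependent unit to its head; unit 0 is the root and has no entry.
    """
    for dependent, head in heads.items():
        lo, hi = sorted((head, dependent))
        # Every unit strictly inside an arc must attach within that arc's span.
        for k in range(lo + 1, hi):
            if not lo <= heads[k] <= hi:
                return False
    return True


def readings() -> List[Tuple[str, str]]:
    """Enumerate (what 'on the hill' modifies, what 'with the telescope' modifies)."""
    results = []
    for hill_head, telescope_head in product([VERB, MAN], [VERB, MAN, ON_HILL]):
        heads = {MAN: VERB, ON_HILL: hill_head, WITH_TELESCOPE: telescope_head}
        if is_projective(heads):
            results.append((LABELS[hill_head], LABELS[telescope_head]))
    return results


for on_hill, with_telescope in readings():
    print(f"'on the hill' modifies {on_hill!r}; 'with the telescope' modifies {with_telescope!r}")
```

Of the six raw combinations, only one is rejected: “on the hill” modifying “saw” while “with the telescope” modifies “the man”, because that attachment would cross the other. Picking among the remaining five requires world knowledge and context, which is exactly the challenge described here.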

Every knowledge domain requires that NLU systems not only recognize new and specialized terminology but also understand how the meanings of words shift in a new context.

When perfected, this leads to a superb user experience.

Voice-First World and the Age of the Machine Man

To paraphrase William Gibson, the future is already here and, thanks to intelligent assistants (IA), it is more evenly distributed than ever before. Based on our spoken input, assistants like Alexa, Siri, and Google Now answer questions, navigate routes and organize meetings. Alexa can also order pizza, hail an Uber, or complete an order from Amazon.

Machines are learning to take over our digital lives using our voice. We’re also finding that we are more comfortable communicating with devices through the spoken word.

“The ultimate destination for the voice interface will be an autonomous humanoid robotic system that very much like a science fiction movie, will fundamentally interact with us via voice.” — Brian Roemmele

Voice Commerce for the Cognitive Era

Voice commerce is still in the late novelty stage as far as implementation is concerned. However, it is poised to bring about a paradigm shift for customers and for other industries, especially advertising-dependent industries.

Amazon’s vision here is the most ambitious: to embed voice services in every possible device, thereby reducing the importance of the device, OS, and application layers; it’s no coincidence that those are also the layers in which Amazon is the weakest. The market potential is huge, so it is no surprise that all the big tech companies are investing heavily in voice and AI.

From Amazon’s standpoint, more important than being the hub of the smart home is continuing to expand its dominance in e-commerce. In March 2015, Amazon rolled out Dash Buttons, which allow consumers to reorder consumer packaged goods (CPG) with the push of a button, and it has very recently enabled Alexa to shop for Amazon Prime products.

Alexa now features more than 3,000 third-party skills. Here is a quick look at 50 things Alexa can do, and an inside story about how Echo was created.

Alexa is the fourth pillar of the Amazon business model. Here you can get a feel for “Voice Shopping with Alexa.”

Voice Banking & Payments: Facilitating Frictionless Commerce

Payment is the most important element in a commerce transaction. Hence, voice payment is a key capability in every voice assistant’s core skill set.

Capital One recently announced the rollout of a new skill that allows consumers to do their banking by voice, including checking balances, reviewing transactions, making payments, and more. For example, Capital One customers can ask Alexa questions like “Alexa, what is my Quicksilver Card balance?” or “Alexa, ask Capital One to pay my credit card bill.” Alexa uses pre-linked funds to pay the bill and can pull up account information to answer other questions. Alexa can already pay for your Uber or order pizza.
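The second example utterance follows a common pattern for third-party voice skills: a wake word, an invocation name that selects the skill, and a request that the skill then interprets. Below is a minimal Python sketch of that routing step, assuming the phrasing “Alexa, ask <skill> to <request>”. The skill registry, the `skill` decorator, and the banking handler are hypothetical; this is not the Alexa Skills Kit API or Capital One's implementation, and a real banking skill would authenticate the user before touching account data.

```python
import re
from typing import Callable, Dict, Optional

# Hypothetical skill registry: invocation name -> handler for the request text.
SKILLS: Dict[str, Callable[[str], str]] = {}


def skill(invocation_name: str):
    """Decorator that registers a handler under a lowercase invocation name."""
    def register(handler: Callable[[str], str]) -> Callable[[str], str]:
        SKILLS[invocation_name.lower()] = handler
        return handler
    return register


@skill("Capital One")
def banking_skill(request: str) -> str:
    # Hypothetical handler; a real skill would verify the linked account first.
    if "pay" in request and "bill" in request:
        return "Paying your credit card bill from your linked account."
    return "Sorry, that banking request isn't supported in this sketch."


# Wake word, optional comma, then "ask <invocation name> to <request>".
INVOCATION = re.compile(
    r"^alexa,?\s+ask\s+(?P<skill>.+?)\s+to\s+(?P<request>.+?)[.?!]?$", re.IGNORECASE
)


def route(utterance: str) -> Optional[str]:
    """Send the request to the named skill, or return None if no skill matches."""
    match = INVOCATION.match(utterance.strip())
    if not match:
        return None
    handler = SKILLS.get(match.group("skill").lower())
    return handler(match.group("request").lower()) if handler else None


print(route("Alexa, ask Capital One to pay my credit card bill."))
```

Separating the invocation name from the request is what lets one assistant host thousands of third-party skills: the platform only has to pick the skill, and each skill interprets its own requests.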

Earlier this year, Microsoft Cortana was integrated with Paytm Wallet. Paytm customers can now pay their utility bills and make mobile transactions using a Cortana-enabled smartphone.

Recently, Apple’s Siri also got some impressive upgrades, including the ability to make payments using Square Cash, Venmo, and number26.

Voice payments and commerce seem to be the next logical step for everyone. However, some players, like Amazon, are a better fit for the task than others.

Voice — Reinventing Home Entertainment

The way we interact with our devices at work and at home is constantly changing. In the not-too-distant future, voice will be the primary means of interacting with technology in the home. Voice solves the problem of increasing device functionality without adding cumbersome buttons and displays to streamlined designs.

For example, in the connected home of the future, an efficient voice interface will eliminate light switches, appliance buttons, remote controls, along with any task that requires you to grab your phone and perform a quick search. As consumers see the capabilities, the market demand for these products will skyrocket.

Viewers can ask their TV, remote control, or set-top box to search for programs, movie titles, actors and actresses, favorite genres, particular sports and virtually any other category of preferred content.

Furthermore, voice biometrics can personalize services: different members of a household can be identified by their voice and instantly access their own custom home screens, frequently searched content, recently viewed items, and personal web applications such as social media feeds.
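Identifying household members by voice is usually framed as speaker identification: each enrolled person's voice is summarized as a fixed-length embedding (a “voiceprint”), and a new utterance is assigned to the closest enrolled voiceprint if it is similar enough. The Python sketch below shows only that matching step, using cosine similarity. The three-dimensional vectors, the names, and the 0.8 threshold are made-up placeholders; real embeddings come from a trained speaker model and typically have hundreds of dimensions.

```python
import math
from typing import Dict, List, Optional

Vector = List[float]

# Hypothetical enrolled voiceprints (real ones come from a trained speaker model).
ENROLLED: Dict[str, Vector] = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.2, 0.8, 0.5],
}


def cosine_similarity(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify_speaker(embedding: Vector, threshold: float = 0.8) -> Optional[str]:
    """Return the best-matching enrolled speaker, or None if nobody is close enough."""
    best_name, best_score = None, -1.0
    for name, voiceprint in ENROLLED.items():
        score = cosine_similarity(embedding, voiceprint)
        if score > best_score:
            best_name, best_score = name, score
    # Below the threshold, treat the speaker as a guest (e.g. show a generic home screen).
    return best_name if best_score >= threshold else None


print(identify_speaker([0.85, 0.15, 0.35]))
```

The threshold is the key design choice: set it too low and one family member sees another's personal feed; set it too high and everyone falls back to the generic profile.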

All the major tech companies (Facebook, Google, Microsoft, Apple, Yahoo, Baidu, and Amazon) are centering their strategies around natural-language user interaction and believe that this is where the future of human-computer interaction is heading.

The Next Big Step Will Be for the Very Concept of the Device to Fade Away

Most high-end smartphones now have a voice assistant built into them. The technology has evolved from pure voice recognition to artificial intelligence (AI), which can understand human language, analyze the content, and respond accordingly. It is reasonable to expect that in five years, all smartphones, TVs, PCs, tablets, GPS, and game consoles will come with a voice assistant. A fair percentage of cars, high-end appliances and toys will also have this technology built in.

“The next big step will be for the very concept of the “device” to fade away. Over time, the computer itself — whatever its form factor — will be an intelligent assistant helping you through your day. We will move from mobile first to an AI first world.” — Google CEO Sundar Pichai

Voice might seem mostly a novelty today, but in technology, the next big thing often starts out looking that way.

As Brian writes: “The computer as we know it has been shrinking and, in many ways, will disappear and become a nexus connecting us via speech. There will still be touch screens and VR headsets, perhaps even ephemeral holographic displays in the next ten years. However, voice interfaces will continue to grow and supplement these experiences.”

Most AI experts agree that applications demonstrating a human-like understanding of language are a possibility today for the first time.

“AI interfaces — which in most cases will mean voice interfaces — Could become the master routers of the internet economic loop, rendering many of the other layers interchangeable…” - Chris Dixon

Keyboard-less smart home devices are rapidly growing in popularity, and they rely on voice for all user interaction: no more typing, swiping, or searching.

The user interface of the future tech devices and appliances will be the one that you can’t see or touch. Voice recognition, voice commands, and audio engagement will be the de facto way to interact with technology. And when all that happens —